Alexander Kolesnikov

Staff Research Engineer at Google DeepMind.

I am a machine learning researcher with extensive experience in computer vision and natural language processing.

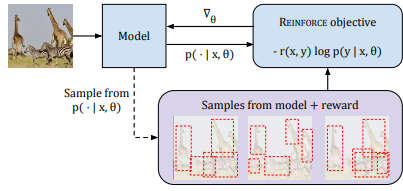

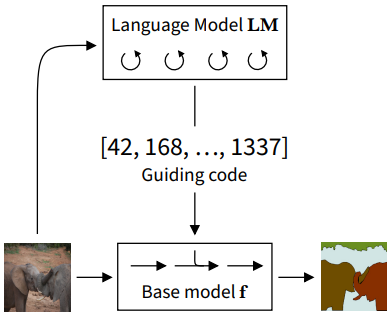

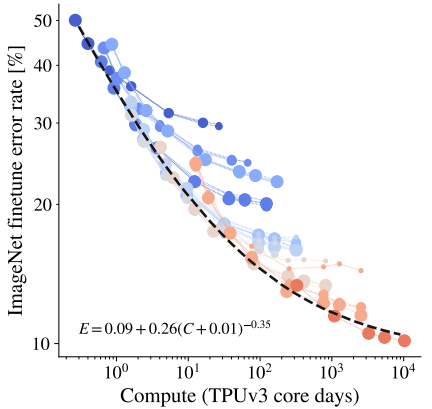

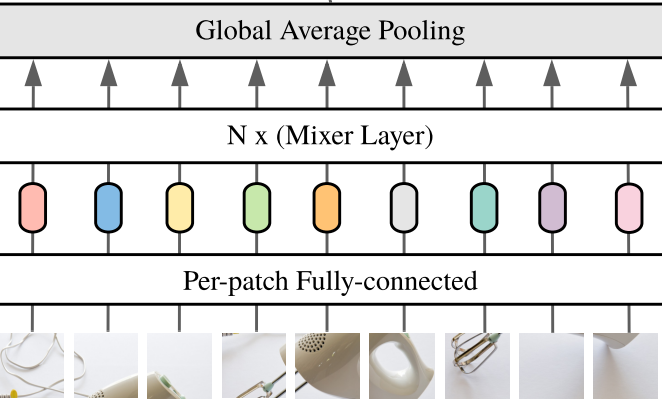

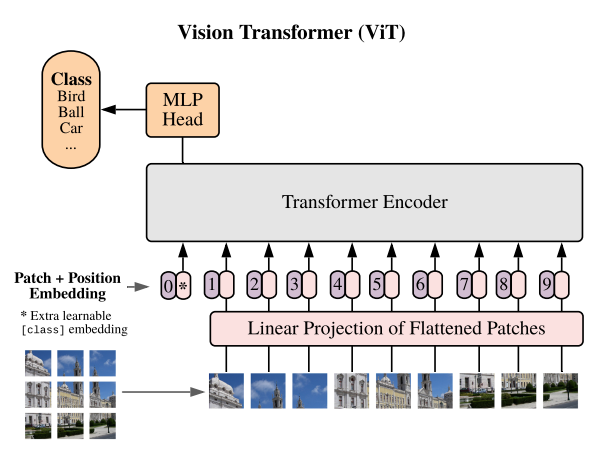

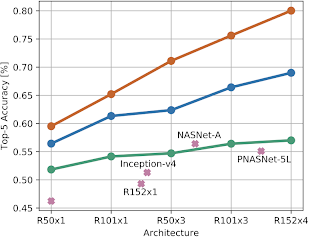

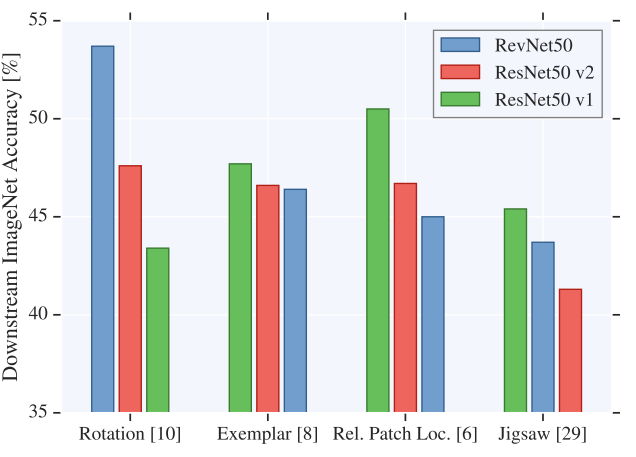

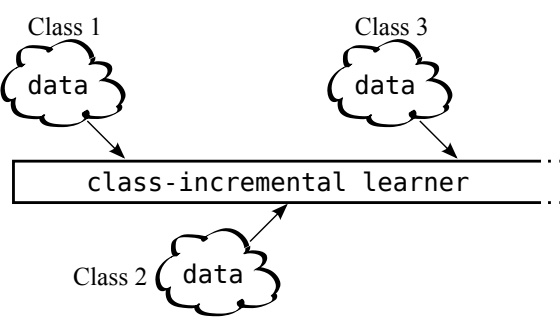

My research projects span topics such as neural architectures, large-scale representation learning and models, transfer learning, generative modeling, reinforcement learning and beyond. I strive to simplify things and make them end-to-end learnable, as opposed to introducing new components. See my papers below for examples of projects with this flavour.

I have also spent a significant chunk of my time designing and coding flexible but powerful research infrastructure. I firmly believe that good infrastructure is a necessary condition for sustainable, high-quality research output.