title = {Gemma 3 technical report},
author = {Team, Gemma},
journal = {arXiv preprint arXiv:2503.19786},
year = {2025}
}

Alexander Kolesnikov

Member of Technical Staff at OpenAI

I am a machine learning researcher focused on advancing deep learning, with a particular interest in multimodal intelligence. I currently work as a member of technical staff at OpenAI, where I contribute to multimodal AI research.

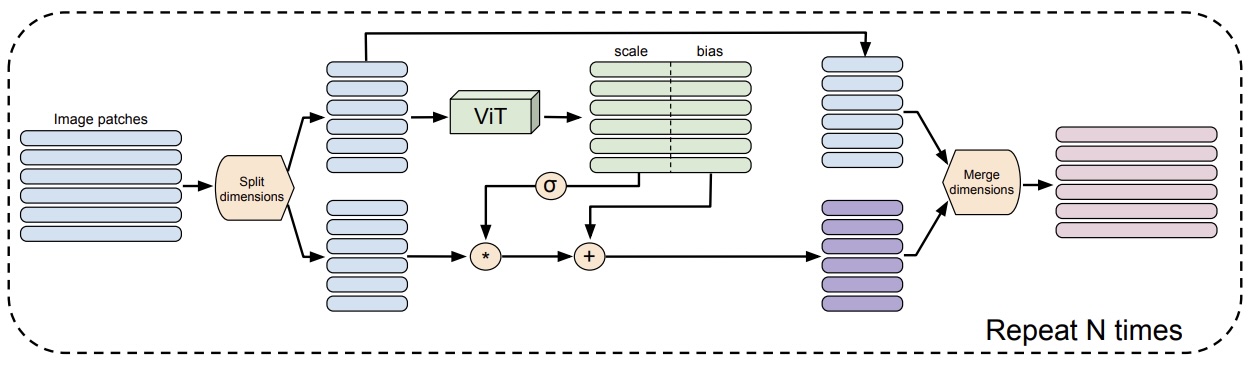

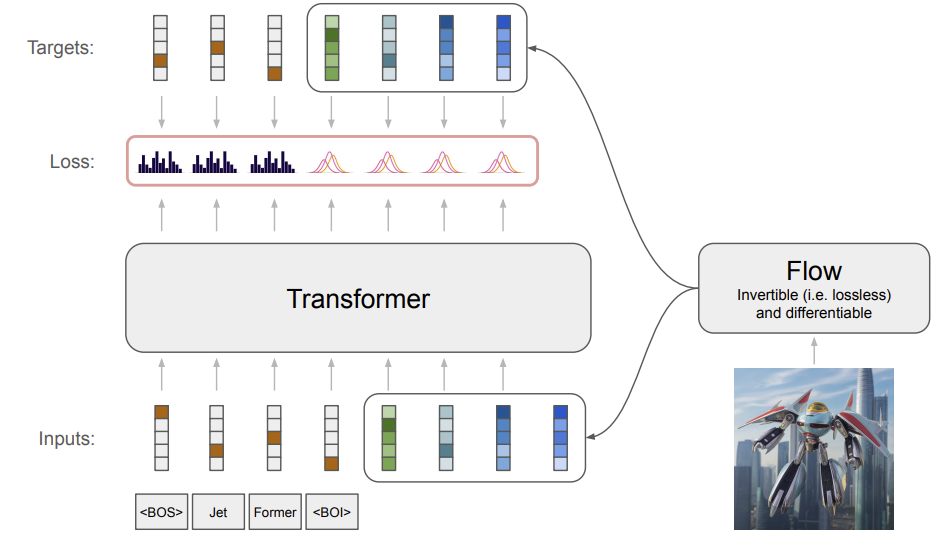

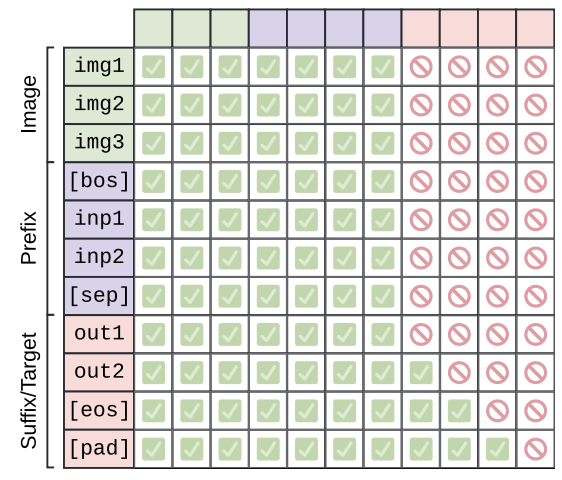

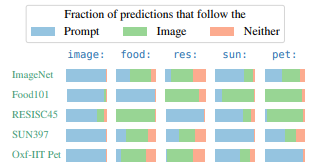

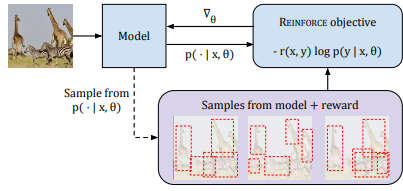

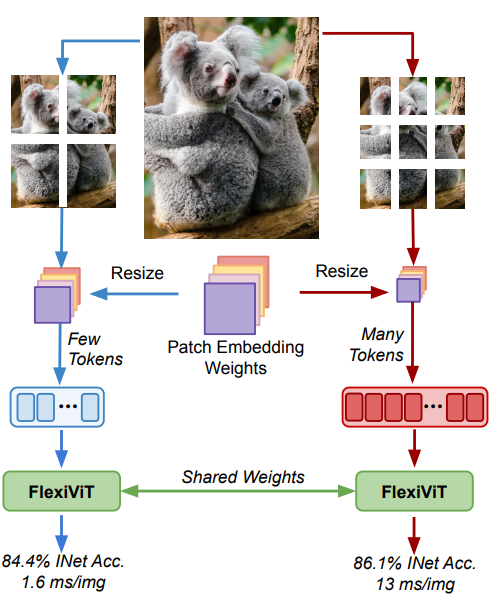

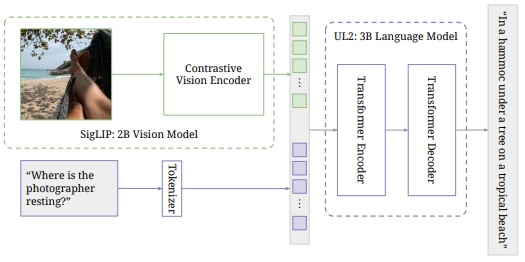

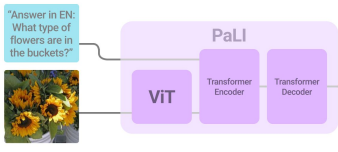

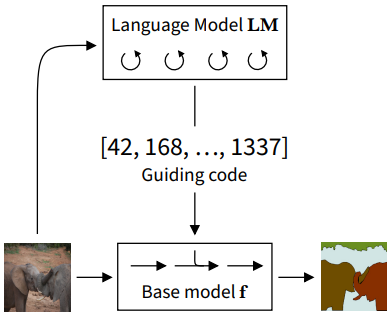

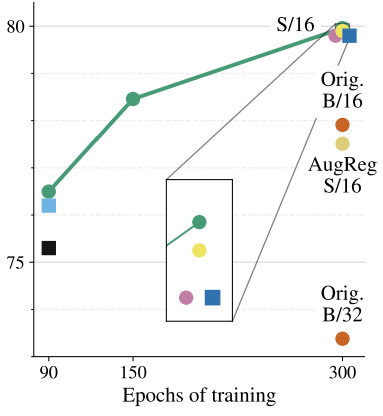

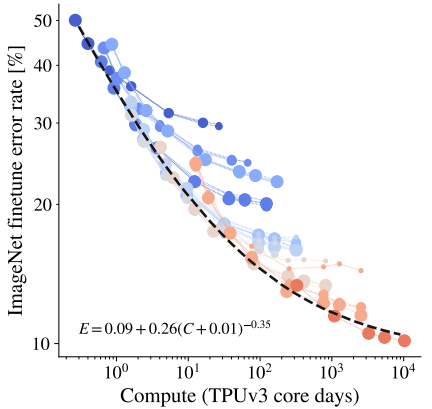

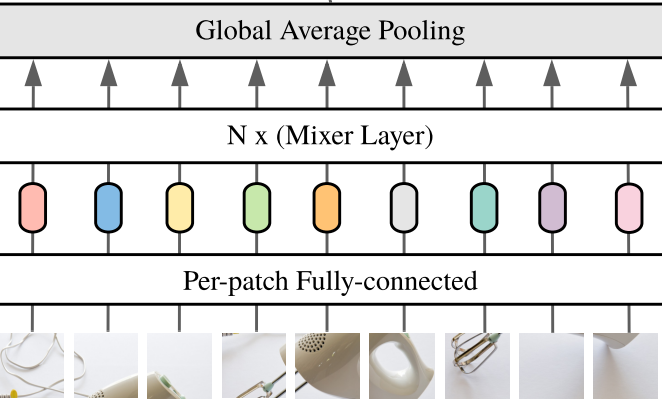

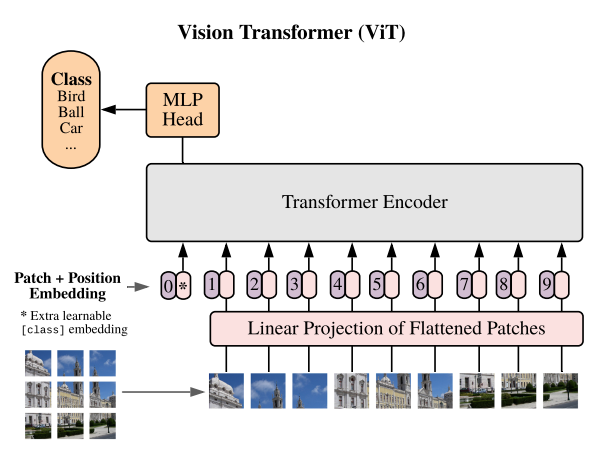

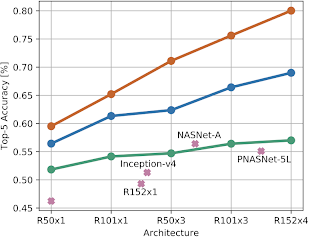

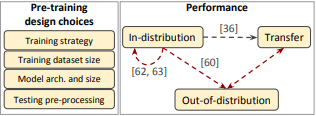

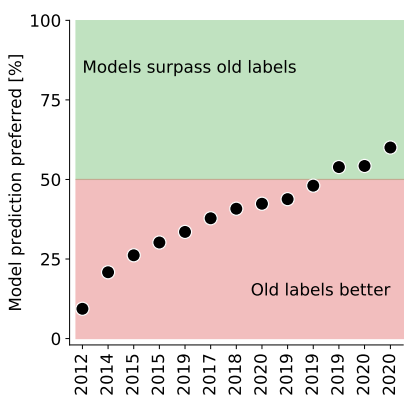

Throughout my career, I've had the opportunity to work on many aspects of computer vision and machine learning. This includes contributing to vision models that set state-of-the-art ImageNet results in 2019, 2020, and 2021, and developing open models such as SigLIP and PaliGemma. I've also worked on neural architectures including BiT, ViT, MLP-Mixer, and FlexiViT. My recent work has focused on making multimodal deep learning more accessible and scalable through projects such as UViM, Vision with Rewards, and JetFormer.

I enjoy developing efficient research infrastructure, particularly using JAX. Some of this work is available in the open-source big_vision repository.

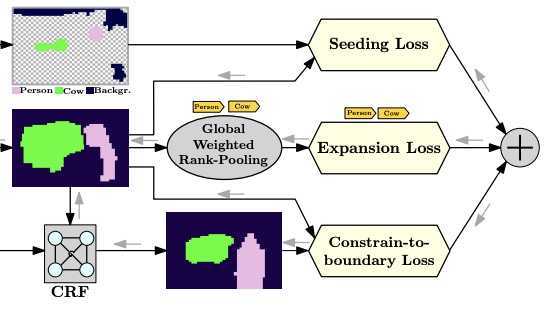

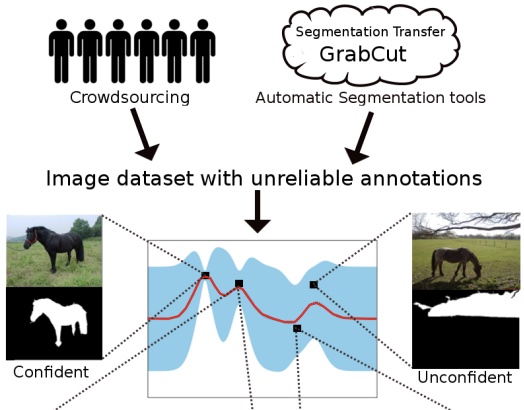

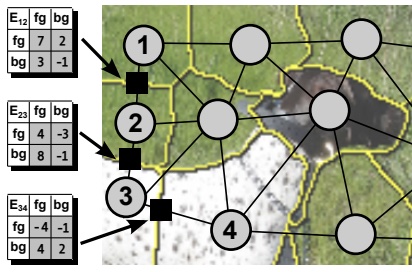

Before joining OpenAI, I was at Google Brain (now Google DeepMind). I completed my PhD at ISTA under Christoph Lampert's supervision, where I studied weakly-supervised learning and generative image models.

You can reach me at a@kolesnikov.ch.